In the rapidly evolving landscape of Web3, ensuring the sustainability of tokenomics projects is essential. This article outlines a comprehensive process for assessing the economic viability of Web3 platforms of any type, such as games, incentive systems or other applications. By following this methodology, teams can systematically assess their project’s strengths and weaknesses, identify potential risks, and implement strategies to mitigate them.

In this article

- Process Overview

- Step 1: Perform Preliminary Analysis

- Step 2: Build Machinations models

- Step 3: Simulate Scenarios

- Step 4: Assess risks

- Final Remarks

Process Overview

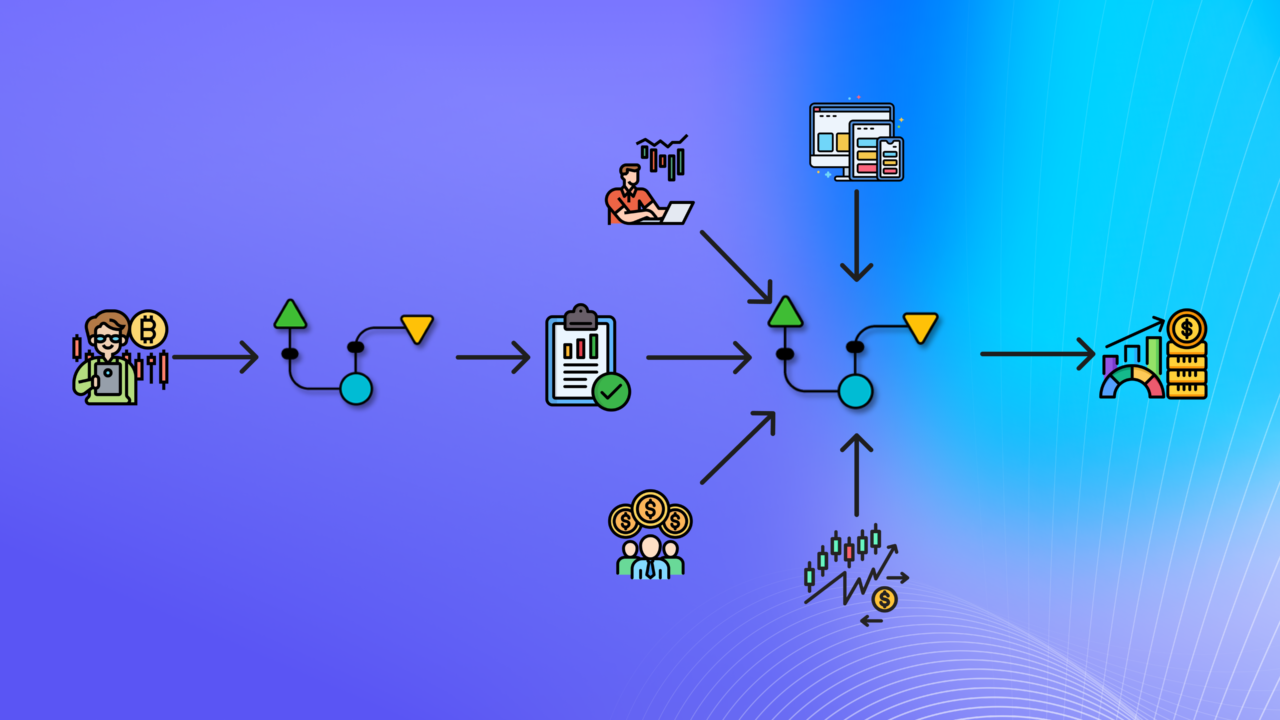

In order to assess the sustainability of a Web3 project, several steps are required to evaluate and mitigate the risks that may materialize. In Machinations, the Web3 assessment process evaluates the project’s compliance with the three pillars of good economy design: currency stability, price rationality and proper allocation. For more details, please refer to the article The Machinations Manifesto For Building Sustainable Game Economies – The Design Pillars.

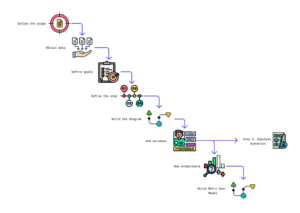

This diagram illustrates an outline of the main steps of the process, which is not necessarily linear.

The stages of the process and their order depend entirely on the project requirements and on the KPIs that need to be measured. In some cases, it may be necessary to return to a previously completed stage because of changes or corrections made along the way.

Step 1: Perform Preliminary Analysis

Initially, it is necessary to gather information that will be used to define the scope of the assessment, hypotheses, goals and any features that are part of the project.

Project Goals

The team has to describe the most relevant goals that the economy must meet for it to be considered sustainable. These goals must be SMART: specific, measurable, attainable, relevant and time-based.

These goals are among the most important aspects to define, because they will be converted into KPIs that are estimated by running predictions in Machinations. If the results do not match the preset goals, there may be a risk in the economy that has to be mitigated.

Some examples of these goals are:

- The price of the token has to be in a specific range over a period of time

- The circulating supply of the token cannot exceed a certain amount

- The number of NFTs of a given rarity cannot exceed a predetermined cap
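
Goals of this kind can be written down as explicit thresholds, so that prediction results can be checked against them mechanically. The following is a minimal sketch in Python. The `Goal` class, the KPI names and every numeric bound are hypothetical illustrations and are not part of Machinations.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Goal:
    """A measurable goal: a KPI that must stay within optional bounds."""
    kpi: str
    minimum: Optional[float] = None
    maximum: Optional[float] = None

    def is_met(self, series: list[float]) -> bool:
        # The goal holds only if every simulated step respects both bounds.
        for value in series:
            if self.minimum is not None and value < self.minimum:
                return False
            if self.maximum is not None and value > self.maximum:
                return False
        return True


def find_unmet_goals(goals: list[Goal], results: dict[str, list[float]]) -> list[str]:
    """Return the KPIs whose simulated series violate their goal."""
    return [goal.kpi for goal in goals if not goal.is_met(results[goal.kpi])]


# Hypothetical goals and prediction results, one value per simulated step.
goals = [
    Goal("token_price_usd", minimum=0.8, maximum=1.2),
    Goal("circulating_supply", maximum=1_000_000),
    Goal("legendary_nfts", maximum=500),
]
results = {
    "token_price_usd": [1.0, 0.95, 1.1],
    "circulating_supply": [200_000, 650_000, 1_050_000],
    "legendary_nfts": [10, 120, 300],
}
unmet = find_unmet_goals(goals, results)
print(unmet)  # ['circulating_supply']
```

In this example only the circulating supply goal is violated, which would flag a potential risk to investigate.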

Design Documents

Every project should have a document that illustrates the features of the platform, such as a Game Design Document, a Whitepaper or a Game Guide. These documents should include the following information:

Tokens

- Type: such as In-game Currencies, Utility Tokens, Governance Tokens, Non-Fungible Tokens (NFTs)

- Taps/Sources: how users earn the token, how much it costs to obtain it and where the token comes from

- Sinks/Drains: how the user can consume the token, what they get in return and what the team does with these tokens

Features/Game Mechanics

- Triggers: how and when the features are activated, if they are a consequence of a direct interaction of the user or a condition from the current state of the application

- Conditions: everything that the user needs to use the feature. This may include payment of some sort of token

- Operation: a description of how the feature works, what it does after it triggers and how it decides on the rewards or penalties

- Rewards/penalties: description of how the feature affects the overall economy, that is, if the user wins or loses something in return

Tokenomics Documents

Every Web3 project should provide a Tokenomics document, which provides a thorough overview of the economic model and financial structure of the project. Some of the most important components this document must include are:

- Tokens with their description

- Token distribution and allocation

- Token supply

- Tokens vesting and release schedules

- Staking with their corresponding rewards and lock periods

- Liquidity pools and AMMs

- Sources of income of the project and investments

- Transaction fees and how these fees are used by the team

Scope

Modeling the entirety of the platform may be very hard or it might not even be relevant. Therefore, it is necessary to define a scope of the assessment considering the following rules:

- Address only the features that are already or are going to be implemented, as long as their design is stable

- Prioritize the mechanics considering the project roadmap and priority of the tasks

- Define what Machinations models will be created and which features they will contain depending on the necessary KPIs

- Only focus on the features that have either a direct or indirect impact on the economy of the system

Assumptions

Several hypotheses have to be made in the model, either because information is missing or simply to keep the model manageable. The accuracy of the results depends on the number of assumptions and on the errors they introduce. It is therefore necessary to compare the simulation results with the team’s own estimates, to check whether the assumptions hold or need to be adjusted.

The following rules should be considered when defining assumptions for the models:

- Every conjecture should be documented and agreed upon with the team

- Every variable or constant whose value is unknown by the team should be assumed

- The order and priority given to the actions performed by users should be either defined by the team or assumed

It is important to maintain a list of each assumption so as to track and follow up any change in the economy or the game design document. The following spreadsheet can be used as a template for this type of data.

Assumptions for Simulation Models

Personas

For the purpose of understanding how all actors that interact with the platform affect the economy of the project, they must be classified into personas, which are behavioral archetypes that represent a group of users. Personas are created to understand the behavior, goals, motivations and needs of a specific cohort of users, all of which is necessary to analyze how they will interact with the platform under different scenarios.

In Web3 projects, personas can be generated in many ways:

- From the platform-specific roles: based on the documentation provided by the team, users can already be classified into personas depending on the features. For example, for a learning platform it is possible to already define three personas: students, instructors and course managers.

- Defined by the project team: the team may already have gathered data regarding what personas are necessary to include in the assessment

- Generic player personas: in the case of Web3 games, players can be classified into Investors, Earners and Players, as explained in this article by Naavik “The 9 Types of Blockchain Gamers”

Step 2: Build Machinations models

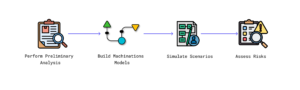

We can look at the platform that is subject to the assessment as a black box: different actors interact with the platform, and those interactions in turn affect the economy.

The platform and its economy can be thought of as a system, made up of several interdependent components that react to the input of the actors. As a result, it is possible to model and simulate the platform in Machinations, where the inputs can be translated into components being triggered under certain conditions, and the KPIs can be obtained by running predictions and estimating values of pools and registers. Typically, two types of diagrams are built to model the entire platform: Single User Models and Multi User Models.

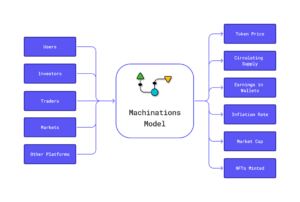

Single User Models

The purpose of these models is to represent how one user of a specific persona interacts with the platform. These interactions can be through features or game mechanics depending on the project type, and will have an effect both on the user’s economy and the entire ecosystem.

Below is a sample Single User Model for a hypothetical Web3 game where players participate in PvP matches, earn tokens and use these tokens to purchase NFTs which will increase their winning chances.

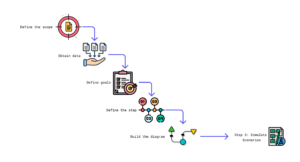

How to build single user models

Creating single user models can be an iterative process, although it generally starts by outlining the goals and specifics of the model so that it can be built accurately and produce the required results.

1- Define the scope

Specify which features will be modeled. The scope should be locked while the model is being built, because adding features in the meantime adds overhead to the process.

2- Obtain data

For each feature, it is necessary to know who triggers it and under what conditions, whether the user has to pay a cost, and what rewards or effects the feature has on the economy.

3- Define goals

List all the KPIs that need to be estimated for a single user, such as tokens rewarded, earnings in wallet or NFTs minted.

4- Define the step

The step is the increment of time in the simulation and it depends entirely on the data gathered for the features and the goals or KPIs that need to be measured. There are two methods for determining the step:

- Fixed-increment time advance: the increment of time is constant, and is given in Δt time units. That is, one step can be one day, one month, two weeks, or any other increment measured in time units. In one single step, many events can happen, such as a user trading tokens, minting NFTs or using their tokens

- Next-event time advance: the increment of time is event by event. The simulation has this type of increment when the times between successive events are not fixed. For instance, we know that a player takes from 1 to 10 minutes to finish a match in a battle royale game. As the time is not fixed, then the step is every time a match is finished.
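
The difference between the two methods can be sketched in plain Python. This is an illustration only, not how Machinations implements its step. The function names, the reward of 2 tokens per match and the fixed number of matches per day are assumptions. The 1 to 10 minute match duration comes from the example above.

```python
import random


def fixed_increment(days: int, matches_per_day: int, reward: float) -> list[float]:
    """Fixed-increment time advance: one step is one day and may hold many events."""
    balance = 0.0
    history = []
    for _ in range(days):
        balance += matches_per_day * reward  # all matches of the day happen in one step
        history.append(balance)
    return history


def next_event(total_minutes: float, reward: float, rng: random.Random) -> list[tuple[float, float]]:
    """Next-event time advance: the clock jumps to the end of each finished match."""
    clock, balance = 0.0, 0.0
    history = []
    while True:
        duration = rng.uniform(1, 10)  # a match lasts between 1 and 10 minutes
        if clock + duration > total_minutes:
            break  # the next match would end after the simulated period
        clock += duration
        balance += reward
        history.append((clock, balance))
    return history


daily = fixed_increment(days=3, matches_per_day=5, reward=2.0)
events = next_event(total_minutes=60, reward=2.0, rng=random.Random(42))
print(daily)        # [10.0, 20.0, 30.0]
print(len(events))  # number of matches finished within one hour
```

With a fixed increment the history has exactly one entry per day, while with next-event advance the number of entries depends on how long each match happened to last.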

5- Build the diagram

In order to build the diagram, you should work on each of the main features separately and connect them when necessary. The Single User Model consists of inputs, outputs and subsystems which represent each of the most relevant features.

Inputs

The platform will receive different inputs from users and external actors. These actors may represent markets, investors, other users or even other platforms, and they need to be considered only if they have an impact on each feature. To accurately model the inputs, the following rules should be considered:

- Every action made by users or external actors has to be represented as a component that is triggered on a specific condition

- Do not use interactive nodes as they will not be activated when executing predictions

- If there is not enough information about when an action is activated, it should be enabled with a certain probability, for example by using random gates, which will later be replaced with data from player personas or live data

- Data and other assumptions should be calculated using random intervals

Subsystems

The subsystems that will be modeled depend on the features of the platform, particularly on those that were described as part of the scope analysis. Some examples of subsystems are:

- Features that reward tokens: this includes all activities that provide tokens or other types of currency in some way, and they should be considered because they have a direct impact on the economy of the project. Playing game modes such as PvP or PvE is an example of this case, as it gives players rewards based on their outcomes

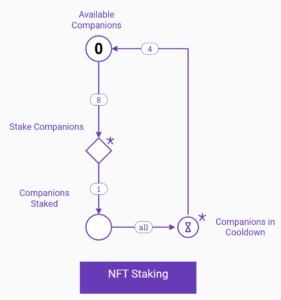

- Staking: this covers situations where users can stake their tokens to get rewards after a certain time, to increase the amount of rewards they will earn or to increase the chance of earning tokens

- NFTs upgrades and alterations: in some platforms users are allowed to modify their NFTs, either as an upgrade or as a change in their attributes. This can affect the economy in many ways: tokens may be required to perform this transaction, the upgraded NFTs may yield more rewards for users or NFTs may be burned as a result

- Token sales and purchases: both fungible tokens and NFTs can be sold or bought in different situations and as a consequence of the state of the platform. Generally, this should be added in the Multi User Model, although it might be necessary to keep track of each individual transaction

Outputs

The Single User Model needs to return a series of data through pools or registers, which, if necessary, will be used as input for the Multi User Model.

The following is a list of typical outputs required from the Single User Model and some recommendations:

- Tokens issued: you should accumulate in a separate pool all of the tokens that were earned by the user to calculate the circulating supply

- Tokens spent: keep all of the tokens that were spent by the user in a dedicated pool. It is not recommended that you use drains to represent expenses because you may lose track of where these tokens go. Also, if you use converters to represent a case where a user spends tokens to obtain something in return, keep track of the tokens that were spent

- NFTs issued: always keep track of the NFTs that were minted or upgraded by the user in separate pools. This is because it may be necessary to calculate and control the circulating supply of NFTs so that they do not exceed the cap

- NFTs burned: it is also necessary to control in a different pool all of the NFTs that were burned and therefore removed from the economy. This may have happened either because the NFT was burned under a specific condition or because the user upgraded or used the NFT to mint another one.
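
The idea of accumulating every flow in a dedicated pool, instead of letting resources disappear into a drain, can be illustrated outside Machinations with a small ledger. This is a sketch under stated assumptions: the `UserLedger` class, its method names and all amounts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UserLedger:
    """Dedicated counters ("pools") for one simulated user."""
    wallet: float = 0.0
    tokens_issued: float = 0.0
    tokens_spent: float = 0.0
    nfts_issued: int = 0
    nfts_burned: int = 0

    @property
    def nfts_in_circulation(self) -> int:
        return self.nfts_issued - self.nfts_burned

    def reward(self, amount: float) -> None:
        """Tokens earned by the user are also added to the issued pool."""
        self.wallet += amount
        self.tokens_issued += amount

    def mint_nft(self, cost: float) -> bool:
        """Spend tokens to mint one NFT; the spent tokens remain traceable."""
        if self.wallet < cost:
            return False
        self.wallet -= cost
        self.tokens_spent += cost
        self.nfts_issued += 1
        return True

    def merge_nfts(self, consumed: int) -> bool:
        """Burn `consumed` NFTs to mint one upgraded NFT."""
        if self.nfts_in_circulation < consumed:
            return False
        self.nfts_burned += consumed
        self.nfts_issued += 1
        return True


ledger = UserLedger()
ledger.reward(100.0)
ledger.mint_nft(cost=30.0)
ledger.mint_nft(cost=30.0)
ledger.merge_nfts(consumed=2)
print(ledger.wallet, ledger.tokens_spent, ledger.nfts_in_circulation)  # 40.0 60.0 1
```

Because every counter only ever grows, the wallet balance can always be reconciled as tokens issued minus tokens spent, and the circulating NFT supply as NFTs issued minus NFTs burned.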

6- Add Personas

To add user behavior to the model, it is suggested that you follow these guidelines:

- List all inputs from the user in the Single User Model, for instance logging in to the platform, upgrading an NFT or deciding to exchange a currency for a token.

- List all of the personas you have already defined in the preliminary analysis

- For each persona, define the probability of performing each action or the conditions under which these actions may activate

- Create a Google Sheet with a table containing a row for each action, a column for each persona and the probabilities or values associated with each combination

- Create a Google Sheet custom variable in Machinations to read the values from the Google Sheet

- Modify the actions so that the model reads the probability of performing them from the custom variable

The linked template gives some examples of how to describe personas, listing personas as columns and actions as rows. Each cell therefore represents a persona-action combination, and its value can be any mathematical expression that can be added in Machinations.
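
The persona table can be pictured as a lookup from action and persona to a probability. The sketch below reproduces that layout in Python with hardcoded values. In the process described above, the values would live in the Google Sheet and be read through a custom variable. All probabilities and action names here are hypothetical.

```python
import random

# Rows are actions, columns are personas. Each cell is the probability that a
# user of that persona performs the action in one step. All values are hypothetical.
ACTION_PROBABILITIES = {
    "log_in":      {"Investor": 0.30, "Earner": 0.90, "Player": 0.70},
    "upgrade_nft": {"Investor": 0.05, "Earner": 0.20, "Player": 0.10},
    "sell_tokens": {"Investor": 0.10, "Earner": 0.60, "Player": 0.05},
}


def sample_actions(persona: str, rng: random.Random) -> list[str]:
    """Return the actions one user of the given persona performs in a single step."""
    performed = []
    for action, by_persona in ACTION_PROBABILITIES.items():
        if rng.random() < by_persona[persona]:
            performed.append(action)
    return performed


def action_frequency(persona: str, action: str, steps: int, seed: int = 0) -> float:
    """Estimate how often a persona performs an action over many steps."""
    rng = random.Random(seed)
    hits = sum(action in sample_actions(persona, rng) for _ in range(steps))
    return hits / steps


print(sample_actions("Earner", random.Random(1)))
print(action_frequency("Earner", "log_in", steps=10_000))  # close to 0.90
```

Keeping the probabilities in one table means a persona can be retuned without touching the logic that triggers the actions.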

7- Run predictions

Once the diagram, or at least an initial version of it, is finished, take one of the following steps:

- Run predictions on the Single User Model: if it is not required to perform a Multi User analysis, it is possible to execute simulations on this model, going directly to Step 3: Simulate Scenarios

- Run predictions and feed them into the Multi User Model: if the Multi User Model is required, store the results, for example in a CSV file, so that you can use them as inputs for the Multi User Model.

In any case, it is recommended that you run as many predictions as possible (at least 30 to 50) to obtain a more accurate estimate of the KPIs.
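
The reason for running many predictions is that the uncertainty of an averaged KPI shrinks as the number of runs grows. The sketch below shows this with a deliberately simple stand-in model. The `simulate_user_earnings` function, the 50% win chance and the 10 token reward are hypothetical and do not represent a Machinations diagram.

```python
import random
import statistics


def simulate_user_earnings(rng: random.Random, steps: int = 30) -> float:
    """Hypothetical single run: the user wins 10 tokens per step with probability 0.5."""
    return sum(10.0 for _ in range(steps) if rng.random() < 0.5)


def summarize_runs(runs: int, seed: int = 0) -> dict[str, float]:
    """Run the stand-in model `runs` times and summarize the KPI across runs."""
    rng = random.Random(seed)
    outcomes = [simulate_user_earnings(rng) for _ in range(runs)]
    mean = statistics.mean(outcomes)
    stdev = statistics.stdev(outcomes)
    # The standard error of the mean shrinks with the square root of the run count.
    standard_error = stdev / runs ** 0.5
    return {"mean": mean, "stdev": stdev, "standard_error": standard_error}


for runs in (5, 50, 200):
    summary = summarize_runs(runs)
    print(runs, round(summary["mean"], 1), round(summary["standard_error"], 2))
```

The spread between individual runs stays roughly the same, but the standard error of the average falls as more runs are added, which is why a handful of predictions is rarely enough.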

Best practices

It is recommended to follow these guidelines when working on Single User Models:

- Build this model only if it is necessary to estimate and extrapolate KPIs from the interactions between one user and the platform

- Only model the features that have a direct or indirect effect on the economy of the platform, especially on fungible and non-fungible tokens

- All currencies that have an impact on the economy need to be represented in the model. However, if currencies have different attributes, it would be best to group them into a few to simplify the model

- If possible, treat each feature as a black box showing at least the inputs and outputs. It is better to have a simple diagram, as details are only necessary if they are of interest for the team

- When different NFTs with many attributes can be minted or bought, either keep track of a limited number of NFTs with their attributes, or group all NFTs as a single resource and calculate an average for their attributes. It is generally recommended to perform the latter as it is more flexible, although it may be complex to group NFTs in terms of their attributes

- If a feature is too complex to add to a complete single user model, but has to be assessed, create a separate model to focus on it. Afterwards, you can run predictions and use the results to input them in the more comprehensive Single User Model.

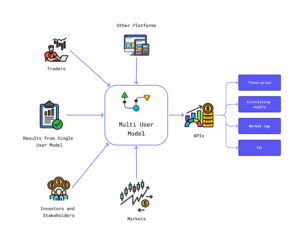

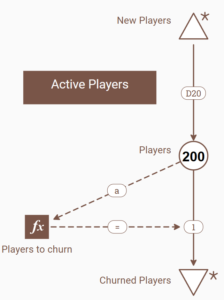

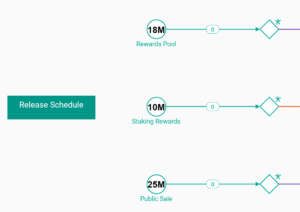

Multi User Models

The objective of these models is to simulate how the interactions between all actors and the platform affect the principal tokenomics KPIs, such as treasury, token prices, circulating NFTs and liquidity pools. If a Single User Model has been created, the results of its predictions should be used as an input to the Multi User Model.

Next is a sample Multi User Model for the same hypothetical game of the previous section. This diagram focuses on how players and investors can affect the token price as a result of staking and trading with an Automated Market Maker.

How to build Multi User Models

Creating Multi User Models is quite similar to building Single User Models, with one caveat: personas should either have been evaluated already during the Single User Model stage, or be added in the Active Users subsystem of the Multi User Model.

1- Define the scope

Enumerate which aspects of the tokenomics will be modeled, such as staking, DEXs, CEXs, liquidity pools and token vesting.

2- Obtain data

For each action carried out by all actors, it is necessary to know when these actions are triggered and under what conditions.

3- Define goals

List all the KPIs that need to be estimated to analyze the sustainability of the project and what their acceptable values are for the project’s team.

4- Define the step

The step may be the same as the one described in the Single User Model but it may depend on other factors such as the frequency required for the results or the data obtained from the previous model. For instance, if the step defined in the Single User Model is 1 hour, you can aggregate the data obtained into days and obtain a daily average to be input in the Multi User Model.
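
Aggregating an hourly series into daily averages is a simple regrouping of the exported results. A minimal sketch follows. The function name and the decision to drop a trailing incomplete day are assumptions made for this illustration.

```python
from statistics import mean


def daily_averages(hourly_values: list[float], hours_per_day: int = 24) -> list[float]:
    """Collapse an hourly KPI series into one average per complete day."""
    full_days = len(hourly_values) // hours_per_day
    return [
        mean(hourly_values[day * hours_per_day:(day + 1) * hours_per_day])
        for day in range(full_days)
    ]


# Two complete days plus five leftover hours, which are ignored.
hourly = [1.0] * 24 + [3.0] * 24 + [5.0] * 5
print(daily_averages(hourly))  # [1.0, 3.0]
```

Dropping the incomplete day avoids mixing a partial average with full-day values. If the leftover hours matter, they can be carried over to the next export instead.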

5- Build the diagram

As in the previous model, we need to think about Multi User Models in terms of inputs, subsystems and outputs.

Inputs

There are two types of inputs for a Multi User Model:

- Data gathered for all users and not considered in the Single User Model: for instance, you may not need to model trades in the Single User Model, but in the current model it may be necessary because you need to estimate the average price

- Data gathered from results of predictions in the Single User Model: most KPIs obtained in the previous model can be fed into this diagram. For example, the predictions run in the Single User Model may have returned the average of in-wallet tokens for all segments. This average can be used to calculate the average of tokens in circulation for the entire user base.
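
As a worked example of the second type of input, per-persona averages from the Single User Model can be scaled by the number of users in each persona. The figures below are hypothetical, and the calculation assumes that the per-user average is representative of every user in the persona.

```python
def tokens_held_by_user_base(avg_wallet: dict[str, float], users: dict[str, int]) -> float:
    """Scale the average in-wallet tokens per persona by the users in that persona."""
    return sum(avg_wallet[persona] * count for persona, count in users.items())


# Hypothetical averages from Single User Model predictions and user counts.
avg_wallet = {"Investor": 500.0, "Earner": 120.0, "Player": 40.0}
users = {"Investor": 100, "Earner": 1_000, "Player": 4_000}

total = tokens_held_by_user_base(avg_wallet, users)
print(total)  # 330000.0
```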

Subsystems

Typically, Multi User Models consist of these subsystems:

- Active Users: it is necessary to calculate the number of Active Users to know how many users of each segment are present and to estimate the circulating supply, trades and any other relevant KPIs. This requires the estimated number of users at the start, as well as the curves or estimated values for user growth and user decay at every step or under predetermined conditions. It can also be important to distribute all users among the personas, either by using gates that assign users to each persona with a certain probability, or by using registers that calculate what portion of the users belongs to each persona

- Liquidity pools: if the token can be traded on Decentralized Exchanges, then their corresponding liquidity pools have to be modeled to estimate the price of the token. The liquidity pool will be affected by different user actions such as token purchases, token sales, adding liquidity and removing liquidity.

- Circulating Supply: to calculate the circulating supply of the token, you have to estimate the total active users, the number of tokens minted or rewarded to players and the number of tokens burned or received from players. This might need to be segregated by segment or persona, as each one will have different behaviors.

- Rewards: some of the project tokens are allocated to reward players if they perform a certain action. These tokens should be taken from a pool representing the Rewards Allocation to keep track of the reserves in the treasury and how it evolves over time.

- Staking: in some projects, users can stake their tokens and/or NFTs to gain some advantages like having voting rights or even earning staking rewards. Generally, staked tokens are represented as Delays in Machinations, as they will be locked until the staking period has finished. This needs to be modeled because staking has an effect on several KPIs such as circulating supply and TVL.

- Token Vesting: two factors always need to be modeled in a tokenomics project: token allocation and release schedule. The total token supply is allocated to different segments such as public sales, staking rewards, liquidity pools or advisors, and these tokens are locked for a certain period of time until they start to be issued according to a release schedule. This has to be modeled as it will have a direct impact on the circulating supply and an indirect impact on the token price.
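
Two of these subsystems lend themselves to a short numeric sketch. The article does not state which pricing rule or release schedule a given project uses, so both are assumptions here: the liquidity pool follows the common constant-product rule (the product of the two reserves stays constant) and ignores fees, and the vesting schedule is a cliff followed by a linear release. All names and figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LiquidityPool:
    """Constant-product pool (token_reserve * stable_reserve = k), without fees."""
    token_reserve: float
    stable_reserve: float

    @property
    def price(self) -> float:
        """Spot price of one token expressed in the stable currency."""
        return self.stable_reserve / self.token_reserve

    def sell_tokens(self, amount: float) -> float:
        """A user sells tokens into the pool and receives stable currency."""
        k = self.token_reserve * self.stable_reserve
        new_token_reserve = self.token_reserve + amount
        new_stable_reserve = k / new_token_reserve
        received = self.stable_reserve - new_stable_reserve
        self.token_reserve = new_token_reserve
        self.stable_reserve = new_stable_reserve
        return received


def vested_amount(allocation: float, month: int, cliff: int, duration: int) -> float:
    """Tokens released by `month`: nothing during the cliff, then a linear release."""
    if month < cliff:
        return 0.0
    elapsed = min(month - cliff, duration)
    return allocation * elapsed / duration


pool = LiquidityPool(token_reserve=1_000.0, stable_reserve=1_000.0)
received = pool.sell_tokens(250.0)
print(received, pool.price)  # 200.0 and a price of about 0.64

# 1,200 tokens with a 6-month cliff and a 12-month linear release.
print([vested_amount(1_200.0, m, cliff=6, duration=12) for m in (3, 12, 30)])
```

The sale of 250 tokens moves the price from 1.0 to roughly 0.64, which illustrates why sell pressure from vested or rewarded tokens needs to be simulated together with the pool.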

Outputs

The results obtained through the Multi User Model vary depending on the requirements of the team. However, these are some of the essential KPIs that have to be estimated:

- Tokens Circulating Supply

- Token Price

- NFTs Circulating Supply

- Total Value Locked

Best practices

When working on Multi User Models, it is advisable to adhere to the following guidelines:

- Build this model only if you need to have an overview of the economic KPIs after evaluating the interactions of all personas. Sometimes Single User Models are enough to perform a thorough assessment

- Use Live Market Feed custom variables to convert the token price to the desired currency

- Focus only on the aggregation of the results of the Single User Model and how the agency of all users can affect the economy. Exclude from this model all features that have already been modeled in the Single User Model

Step 3: Simulate Scenarios

Once the Machinations models have been reviewed and validated with the team, a series of predictions has to be run to study how the platform behaves under different scenarios. The goal of this step is to analyze as many scenarios as possible to detect any risks that may have an impact on the economy.

Defining Scenarios

Depending on the project’s goals, a set of different scenarios has to be analyzed, considering these rules:

- The scenarios should focus on the behavior of all of the actors that interact with the platform

- Platform issues and security attacks should not be treated as scenarios

- To test different scenarios, it is recommended to create custom variables, where each scenario is a combination of values assigned to each variable. For instance, the number of active users, the amount of tokens staked or the number of matches played per day can be parameters that are tweaked to test a variety of scenarios

- If only the Single User Model has been created, the scenarios should be defined based on the platform parameters, such as rewards given to users, upgrade costs, NFTs costs and so on

- If the Multi User Model has been built, the scenarios will be associated with the behavior of all of the actors, like number of users, sell or buy pressure or tokens traded per day
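
Treating each scenario as one combination of variable values makes the full set easy to enumerate. The sketch below builds that grid in Python. The variable names and candidate values are hypothetical, and in practice each combination would be applied to the custom variables of the model before running predictions.

```python
from itertools import product

# Hypothetical custom variables and the values to test for each one.
VARIABLES = {
    "active_users": [1_000, 10_000, 100_000],
    "staked_share": [0.0, 0.25, 0.5],
    "matches_per_day": [3, 10],
}


def build_scenarios(variables: dict[str, list]) -> list[dict]:
    """Every scenario is one combination of values, one value per variable."""
    names = list(variables)
    return [dict(zip(names, combo)) for combo in product(*variables.values())]


scenarios = build_scenarios(VARIABLES)
print(len(scenarios))  # 18
print(scenarios[0])    # {'active_users': 1000, 'staked_share': 0.0, 'matches_per_day': 3}
```

The number of scenarios grows multiplicatively with each added variable, so it is usually worth limiting the grid to the values that the team considers plausible or critical.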

Scenarios examples

- Base scenario: this case represents a standard situation with no extreme events. All of the parameters are set to the initial values defined by the project or assumed as normal

- Trading volumes: several scenarios can be tested by analyzing extremely low or high numbers of users that trade tokens or NFTs. It is important to define as parameters both the number of users that trade tokens and the volume of each transaction

- Active users: it is important to analyze the impact that a high or low number of active users has on the economy of the platform

- Staking: to analyze the impact that staking may have on the circulating supply and the token price, several scenarios are recommended. These may include a low or high number of users staking, a low or high number of tokens staked, or no staking at all

Step 4: Assess risks

The results of the predictions run for all scenarios can be used to detect any risks, which have to be classified considering their likelihood and impact on the game economy.

Scenarios, probabilities and impact

A risk can be defined as the likelihood that an event has an impact on the project (either positive or negative), affecting its ability to meet the team’s objectives. This implies that the stakeholders should determine a clear set of objectives that have to be met, and any deviation from them may represent a risk.

A generic form of writing an economic risk for this framework is:

If <Event> occurs then <Impact>

Therefore, each risk is a combination of three aspects:

- Event: a condition or situation that may materialize and affect the project

- Probability: the likelihood that the event occurs

- Impact: the effects that the materialization of the event can have on the project

Risks can be classified into:

- Qualitative: flaws in the implementation or the design of the platform and its impact on the economy. These can be identified by an analysis of the project’s documentation

- Quantitative: KPIs and values that are outside of expected or desired thresholds. These can be obtained by running predictions for different scenarios with Machinations

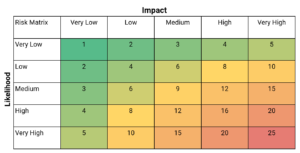

Risk Matrix

All scenarios can be analyzed with a risk matrix which shows the probabilities as rows and the impact as columns, where each probability-impact combination represents a risk. The next matrix is an example of how you can classify risks depending on their likelihood and their impact:

Each attribute of the table follows these guidelines:

Likelihood

This corresponds to the probability that the event occurs, which can depend on many factors such as:

- Number of scenarios or simulations where the risk was detected

- How easy it is for the risk to materialize

- Number of users affected by the finding or event

- Current mitigation measures applied to solve the issue and their effectiveness

Impact

The impact represents the consequences and effects of the risk on the economy if it materializes. It is generally expressed in terms of the three pillars of good economy design:

- Currency stability: tokens are kept within certain bounds of value

- Price rationality: token prices should match what the users expect or want them to be

- Proper allocation: goods are distributed among people in a fair, reasonable manner

Risks

Each risk results from a combination of probability and impact, and its score is obtained by multiplying the values of the corresponding row and column.

Risks can also be classified depending on the results of all the combinations in the matrix:

- Very Low/Low: these are acceptable risks, and represent situations where either the likelihood or the consequences are low. It may be a problem because there is a deviation from the actual goals, but the impact is negligible.

- Medium: these risks comprise events where the impact or probability can be such that some goals are threatened but are not critical. These events are likely to occur and their consequences are moderate.

- High/Very High: these risks include events that are likely to materialize and whose impact can be serious, leading to important financial losses or severe decay of active users. A mitigation plan should be prepared to deal with these risks as quickly as possible to prevent any damage.
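
The multiplication of likelihood and impact can be sketched as follows. The article names the levels from Very Low to Very High but does not give their numeric values or the cut-offs between severity classes, so the 1 to 5 scale and the bands below are assumptions chosen for illustration.

```python
# Assumed numeric scale for both likelihood and impact.
LEVELS = {"Very Low": 1, "Low": 2, "Medium": 3, "High": 4, "Very High": 5}

# Hypothetical cut-offs: a score up to the bound falls into the named class.
SEVERITY_BANDS = [(2, "Very Low"), (4, "Low"), (9, "Medium"), (16, "High"), (25, "Very High")]


def risk_score(likelihood: str, impact: str) -> int:
    """Multiply the value of the likelihood row by the value of the impact column."""
    return LEVELS[likelihood] * LEVELS[impact]


def risk_severity(likelihood: str, impact: str) -> str:
    """Classify a likelihood-impact combination into a severity class."""
    score = risk_score(likelihood, impact)
    for upper_bound, label in SEVERITY_BANDS:
        if score <= upper_bound:
            return label
    raise ValueError(f"score {score} is outside the matrix")


print(risk_score("High", "Medium"), risk_severity("High", "Medium"))  # 12 High

# Print the full matrix with likelihood as rows and impact as columns.
for likelihood in LEVELS:
    print(likelihood.ljust(10), [risk_severity(likelihood, impact) for impact in LEVELS])
```

With these bands, an unlikely event with a very high impact scores 5 and lands in the Medium class, which shows how much the classification depends on where the team places the cut-offs.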

Risk Assessment Process

With the information gathered throughout the assessment, particularly after predictions have been run on the models, it is necessary to detect any risks. The following document contains a list of suggested risks that may be encountered during the assessment, with their associated impact:

Web3 Economy Assessment Findings and Risks

The analyst has to follow this procedure for each of the events in the spreadsheet to evaluate the final score given to a project:

- Identify risks: the analyst has to go through the risks listed in the sheet “Risks and Impact” and check if these events have been detected

- Analyze risks: for each risk that was identified, it is required to assign its probability and impact by giving a value to the columns “Likelihood” and “Impact” from “Very Low” to “Very High”.

- Evaluate risks: the spreadsheet will automatically calculate the risk severity of each finding depending on the values assigned to the likelihood and the impact. Once all risks have been analyzed, the sheet “Final Score” contains the overall score for the project.

The purpose of the risk assessment process is to evaluate the sustainability of the economy of the project, so the acceptable scores depend entirely on the analyst’s decision.

If any risks are identified, the analyst should work together with the team to ensure that measures are taken to mitigate them. This may imply starting the process again by making changes to the models, running predictions and studying the impact of these changes on the economy.

Final Remarks

Analyzing the sustainability of a tokenomics project (or any other type of project) can be done in various ways depending on the allotted time, budget, stage of the project or urgency. The process outlined in this article is generic, applying to any Web3 platform whose economy needs to be evaluated. Therefore, some steps may be omitted, reorganized, or reiterated. The preliminary analysis shows only the minimum documents and information necessary to carry out the assessment, although the more information about the project, the better. It is also crucial to have periodic meetings with the team to validate the models and any hypotheses that might have been made.

The main purpose of this process is to help the team detect any issues beforehand and help prevent or mitigate them if the risks materialize. It is recommended to define a team member responsible for the follow-up and monitoring of the risks.

Finally, the assessment should not be a one-time or static process. If the platform is already live, the process should be used to conduct LiveOps and detect any issues with real data. If the platform is not yet live, the assessment should be updated whenever a new feature that can affect the project’s economy is added.